Containers

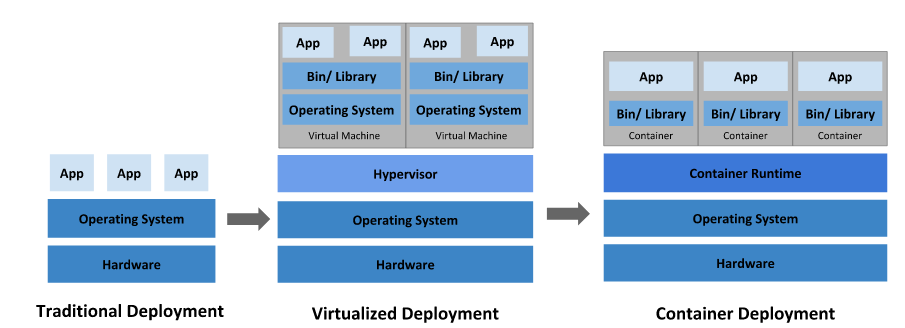

Containers virtualize at the operating-system level; hypervisors virtualize at the hardware level.

Hypervisors abstract the operating system from the hardware; containers abstract the application from the operating system.

Hypervisors consume storage space for each instance. Containers use a single shared storage space plus a small delta for each layer, so they use storage much more efficiently.
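The layer idea can be illustrated with a toy Python sketch of how a union (overlay) filesystem resolves paths across layers. It is a simplified model under stated assumptions: each layer is a hypothetical dict mapping paths to contents, the topmost layer wins, and a deletion is recorded with an illustrative `WHITEOUT` marker rather than the whiteout files a real overlay filesystem uses.

```python
from typing import Dict, List, Optional

# Hypothetical marker for "deleted in this layer"; real overlay filesystems
# record deletions with special whiteout files instead.
WHITEOUT = object()

Layer = Dict[str, object]  # path -> file contents


def lookup(layers: List[Layer], path: str) -> Optional[object]:
    """Resolve `path` by searching from the top layer (last) down to the base (first)."""
    for layer in reversed(layers):
        if path in layer:
            value = layer[path]
            # A whiteout hides every copy of the file in the layers below it.
            return None if value is WHITEOUT else value
    return None


def merged(layers: List[Layer]) -> Dict[str, object]:
    """Build the container's view of the filesystem by applying layers bottom-up."""
    view: Dict[str, object] = {}
    for layer in layers:
        for path, value in layer.items():
            if value is WHITEOUT:
                view.pop(path, None)
            else:
                view[path] = value
    return view


# One shared base layer; each container stores only its own small delta.
base: Layer = {"/etc/os-release": "debian", "/bin/sh": "dash", "/etc/motd": "hello"}
delta1: Layer = {"/app/main.py": "print('one')", "/etc/motd": WHITEOUT}
delta2: Layer = {"/app/main.py": "print('two')"}
container1 = [base, delta1]
container2 = [base, delta2]

print(lookup(container1, "/etc/motd"), lookup(container2, "/etc/motd"))
```

Both containers reference the same `base` object, which is the point: the base is stored once and only the deltas differ.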

Containers can boot and be application-ready in less than 500 ms, which creates new design opportunities for rapid scaling. Hypervisor guests boot a full operating system, typically in about 20 seconds depending on storage speed.

Containers have built-in, high-value APIs for cloud orchestration; hypervisors have lower-quality APIs with limited cloud orchestration value.

linuxcontainers

linuxcontainers.org is the umbrella project behind LXC, LXD and LXCFS.

The goal is to offer a distro and vendor neutral environment for the development of Linux container technologies.

Our main focus is system containers. That is, containers which offer an environment as close as possible to the one you'd get from a VM but without the overhead that comes with running a separate kernel and simulating all the hardware.

LXC

LXC is the well-known set of tools, templates, library and language bindings. It's pretty low level, very flexible and covers just about every containment feature supported by the upstream kernel.

LXC is production ready with LTS releases coming with 5 years of security and bugfix updates.

LXD

LXD is the new LXC experience. It offers a completely fresh and intuitive user experience with a single command line tool to manage your containers. Containers can be managed over the network in a transparent way through a REST API. It also works with large scale deployments by integrating with cloud platforms like OpenNebula and OpenStack.

LXCFS

Userspace (FUSE) filesystem offering two main things:

- Overlay files for cpuinfo, meminfo, stat and uptime.

- A cgroupfs compatible tree allowing unprivileged writes.

It's designed to work around the shortcomings of procfs, sysfs and cgroupfs by exporting files which match what a system container user would expect.

distrobuilder

Image building tool for LXC/LXD:

- Complex image definition as a simple YAML document.

- Multiple output formats (chroot, LXD, LXC).

- Support for a lot of distributions and architectures.

distrobuilder was created as a replacement for the old shell scripts that were shipped as part of LXC to generate images. Its modern design uses pre-built official images whenever available, uses a declarative image definition (YAML) and supports a variety of modifications on the base image.

docker

Docker is a software tool chain for managing Linux containers. Early releases used LXC under the hood; since Docker 0.9 the default has been Docker's own libcontainer (now runc). This seems conceptually similar to the way that vSphere vCenter manages large numbers of ESXi hypervisor instances, but in operation it is very different and much more powerful. [1][2] See also the docker run reference: https://docs.docker.com/engine/reference/run/

- Docker is an open platform for developers and sysadmins to build, ship, and run distributed applications. Consisting of Docker Engine, a portable, lightweight runtime and packaging tool, and Docker Hub, a cloud service for sharing applications and automating workflows, Docker enables apps to be quickly assembled from components and eliminates the friction between development, QA, and production environments. As a result, IT can ship faster and run the same app, unchanged, on laptops, data center VMs, and any cloud. – What Is Docker? An open platform for distributed apps.

- Processes executing in a Docker container are isolated from processes running on the host OS or in other Docker containers. Nevertheless, all processes are executing in the same kernel. Docker leverages LXC to provide separate namespaces for containers, a technology that has been present in Linux kernels for 5+ years and considered fairly mature. It also uses Control Groups, which have been in the Linux kernel even longer, to implement resource auditing and limiting. – Lightweight Linux Containers for Consistent Development and Deployment.

- However, as you spend more time with containers, you come to understand the subtle but important differences. Docker does a nice job of harnessing the benefits of containerization for a focused purpose, namely the lightweight packaging and deployment of applications. – Lightweight Linux Containers for Consistent Development and Deployment.

- Docker containers have an API that allows external administration of the containers. This is the core value proposition of Docker.

- Containers have less overhead than VMs (both KVM & ESX) and are generally faster than the same application running inside a hypervisor.

- Most Linux applications can run inside a Docker container.

See Setup Docker for installation instructions.

Lifecycle

- docker create ; creates a container but does not start it.

- docker rename ; allows the container to be renamed.

- docker run ; creates and starts a container in one operation.

- docker rm ; deletes a container.

- docker update ; updates a container's resource limits.

A container runs only as long as its main process does. If the image's default command exits straight away (for example, a shell with no terminal attached), the container will start and stop immediately. To keep it running, use docker run -td image_name: -t allocates a pseudo-TTY and -d detaches the container (runs it in the background and prints the container ID).

If you want a transient container, docker run --rm will remove the container after it stops.

If you want to map a directory on the host to a docker container, docker run -v $HOSTDIR:$DOCKERDIR. Also see Volumes.

To also remove the volumes associated with a container, include the -v switch when deleting it, as in docker rm -v (this removes anonymous volumes only; named volumes are kept).

As of Docker 1.10 you can also choose the logging driver for individual containers. To run a container with a custom log driver (e.g., syslog), use docker run --log-driver=syslog.

Another useful option is docker run --name yourname docker_image: when you specify --name in the run command, you can later start and stop the container by the name you gave it when you created it.

Starting and Stopping

- docker start ; starts a container so it is running.

- docker stop ; stops a running container.

- docker restart ; stops and starts a container.

- docker pause ; pauses a running container, "freezing" it in place.

- docker unpause ; unpauses a paused container.

- docker wait ; blocks until running container stops.

- docker kill ; sends a SIGKILL to a running container.

- docker attach ; will connect to a running container.

If you want to detach from a running container, use Ctrl + p, Ctrl + q. If you want to integrate a container with a host process manager, start the daemon with -r=false then use docker start -a.

If you want to expose container ports through the host, see the exposing ports section.

Restart policies on crashed docker instances are covered at docker-restart-policies.

CPU Constraints

You can limit CPU, either using a percentage of all CPUs, or by using specific cores.

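For example, you can set the cpu-shares value (-c is the short form of --cpu-shares). It is a relative weight rather than a hard percentage: the default is 1024, so a container started with 512 gets roughly half the CPU time of a default container when both are competing for CPU, but it can still use idle CPU when there is no contention. For a hard cap, Docker 1.13 and later also offer --cpus (for example --cpus=0.5). See https://goldmann.pl/blog/2014/09/11/resource-management-in-docker/#_cpu for more: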

docker run -it -c 512 agileek/cpuset-test
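Because cpu-shares is a relative weight, the CPU a busy container gets under full contention is roughly its weight divided by the sum of the weights of all competing containers. The Python sketch below models only that proportional rule; it is a simplification (the kernel scheduler also depends on the cgroup hierarchy, and idle containers leave their share to others), and the container names are hypothetical.

```python
from typing import Dict


def cpu_fraction(weights: Dict[str, int]) -> Dict[str, float]:
    """Approximate share of total CPU each busy container gets under contention.

    `weights` maps a container name to its --cpu-shares value (default 1024).
    Only containers that are actually competing for CPU should be included.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {name: w / total for name, w in weights.items()}


# Two busy containers: one with the default weight, one started with -c 512.
shares = cpu_fraction({"default": 1024, "half": 512})
for name, frac in shares.items():
    print(f"{name}: {frac:.0%}")
```

With weights 1024 and 512 the two containers get about two thirds and one third of the contended CPU; a single busy container gets all of it regardless of its weight.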

You can also only use some CPU cores using cpuset-cpus. See https://agileek.github.io/docker/2014/08/06/docker-cpuset/ for details and some nice videos:

docker run -it --cpuset-cpus=0,4,6 agileek/cpuset-test

Note that processes inside the container can still see all of the host's CPUs (for example in /proc/cpuinfo); the container is just restricted to running on the selected ones. See https://github.com/docker/docker/issues/20770 for more details.
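The --cpuset-cpus value is a comma-separated list of CPU numbers and ranges, such as 0,4,6 or 0-3. The Python sketch below expands that list syntax so you can check which cores a value selects; `parse_cpuset` is a hypothetical helper written for illustration, not part of Docker.

```python
from typing import List


def parse_cpuset(spec: str) -> List[int]:
    """Expand a cpuset string like "0-2,4,6" into a sorted list of CPU ids."""
    cpus = set()
    for part in spec.split(","):
        part = part.strip()
        if not part:
            continue
        if "-" in part:
            # A range such as "0-3" is inclusive at both ends.
            start_s, end_s = part.split("-", 1)
            start, end = int(start_s), int(end_s)
            if start > end:
                raise ValueError(f"invalid range: {part}")
            cpus.update(range(start, end + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


print(parse_cpuset("0,4,6"))
print(parse_cpuset("0-3,8"))
```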

Memory Constraints

You can also set memory constraints on Docker:

docker run -it -m 300M ubuntu:14.04 /bin/bash
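The -m value is a number with an optional unit suffix (b, k, m or g). The Python sketch below converts such a value to bytes, assuming binary multiples (1k = 1024 bytes), which is how Docker interprets these suffixes; `memory_to_bytes` is an illustrative helper, not Docker's own parser, and it does not accept the decimal or "MiB"-style forms Docker also tolerates.

```python
import re

# Binary multipliers for Docker-style memory suffixes (matched case-insensitively).
_UNITS = {"": 1, "b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def memory_to_bytes(value: str) -> int:
    """Convert a value such as "300M" or "1g" to a number of bytes."""
    match = re.fullmatch(r"(\d+)([bkmg]?)", value.strip(), re.IGNORECASE)
    if match is None:
        raise ValueError(f"unrecognised memory value: {value!r}")
    number, unit = match.groups()
    return int(number) * _UNITS[unit.lower()]


print(memory_to_bytes("300M"))  # 314572800
```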

Capabilities

Linux capabilities can be added or removed with --cap-add and --cap-drop. See https://docs.docker.com/engine/reference/run/#/runtime-privilege-and-linux-capabilities for details. Dropping the capabilities a container does not need improves security.

To mount a FUSE based filesystem, you need to combine both --cap-add and --device:

docker run --rm -it --cap-add SYS_ADMIN --device /dev/fuse sshfs

Give access to a single device:

docker run -it --device=/dev/ttyUSB0 debian bash

Give access to all devices:

docker run -it --privileged -v /dev/bus/usb:/dev/bus/usb debian bash

Information

- docker ps ; shows running containers.

- docker ps -a ; shows running and stopped containers.

- docker logs ; gets logs from container. (You can use a custom log driver, but logs is only available for json-file and journald in 1.10).

- docker inspect ; looks at all the info on a container (including IP address).

- docker events ; gets events from container.

- docker port ; shows public facing port of container.

- docker top ; shows running processes in container.

- docker stats ; shows containers' resource usage statistics.

- docker stats --all ; shows resource usage for all containers (by default only running containers are shown).

- docker diff ; shows changed files in the container's FS.

Import / Export

- docker cp ; copies files or folders between a container and the local filesystem.

- docker export ; turns container filesystem into tarball archive stream to STDOUT.

Executing Commands

- docker exec ; executes a command in a running container.

To enter a running container called foo by attaching a new shell process to it, use: docker exec -it foo /bin/bash.

Images

Images are just templates for docker containers.

Lifecycle

- docker images ; shows all images.

- docker import ; creates an image from a tarball.

- docker build ; creates image from Dockerfile.

- docker build --no-cache -t u12_core -f u12_core . ; when you don't want to use the cache to obtain an image

- docker commit ; creates image from a container, pausing it temporarily if it is running.

- docker rmi ; removes an image.

- docker load ; loads an image from a tar archive as STDIN, including images and tags (as of 0.7).

- docker save ; saves an image to a tar archive stream to STDOUT with all parent layers, tags & versions (as of 0.7).

- docker history ; shows history of image.

- docker tag ; tags an image to a name (local or registry).

Cleaning up

While you can use the docker rmi command to remove specific images, there's a tool called docker-gc that will safely clean up images that are no longer used by any containers. As of docker 1.13, docker image prune is also available for removing unused images. See Prune.

Load/Save image

- Load an image from file:

docker load < my_image.tar.gz

- Save an existing image:

docker save my_image:my_tag | gzip > my_image.tar.gz

Import/Export container

- Import a container as an image from file:

cat my_container.tar.gz | docker import - my_image:my_tag

- Export an existing container:

docker export my_container | gzip > my_container.tar.gz

Loading an image using the load command creates a new image including its history. Importing a container as an image using the import command creates a new image excluding the history, which results in a smaller image size compared to loading an image.

Networks

network

Docker has a networks feature. User-defined networks are a good way to let Docker containers talk to each other without publishing ports on the host, and containers on the same user-defined network can reach each other by container name. See working with networks for more details.

- docker network create

- docker network rm

- docker network ls

- docker network inspect

Connection

- docker network connect

- docker network disconnect

You can specify a specific IP address for a container:

- create a new bridge network with your subnet and gateway for your IP block

docker network create --subnet 203.0.113.0/24 --gateway 203.0.113.254 iptastic

- run an nginx container with a specific IP in that block

docker run --rm -it --net iptastic --ip 203.0.113.2 nginx

- curl the IP from any other host (assuming your block is public and routable; 203.0.113.0/24 itself is reserved for documentation)

curl 203.0.113.2

Registry & Repository

A repository is a hosted collection of tagged images that together create the file system for a container.

A registry is a host -- a server that stores repositories and provides an HTTP API for managing the uploading and downloading of repositories.

Docker hosts a central public registry (Docker Hub) which contains a large number of repositories. That said, the central registry does not do a good job of verifying images and should be avoided if you're worried about security.

- docker login to log in to a registry.

- docker logout to log out of a registry.

- docker search searches registry for image.

- docker pull pulls an image from registry to local machine.

- docker push pushes an image to the registry from local machine.
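An image reference combines a registry host, a repository path and a tag or digest. The Python sketch below splits a reference using the usual Docker conventions: the first path component is treated as a registry if it contains a dot or colon or is localhost; otherwise docker.io is assumed, single-name images live under library/, and the tag defaults to latest. `parse_image_ref` and `ImageRef` are hypothetical names, and the sketch does not validate names against Docker's full reference grammar.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Split a reference such as "myhost:5000/team/app:1.2" into its parts."""
    digest = None
    if "@" in ref:
        ref, digest = ref.split("@", 1)

    # Split off the registry host if the first component looks like one.
    registry = "docker.io"
    first, sep, rest = ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, ref = first, rest

    # A tag is a ":" that comes after the last "/".
    tag = None
    colon, last_slash = ref.rfind(":"), ref.rfind("/")
    if colon > last_slash:
        ref, tag = ref[:colon], ref[colon + 1:]

    if registry == "docker.io" and "/" not in ref:
        ref = "library/" + ref  # official images live under library/
    if tag is None and digest is None:
        tag = "latest"
    return ImageRef(registry, ref, tag, digest)


print(parse_image_ref("ubuntu:14.04"))
print(parse_image_ref("myhost:5000/team/app:1.2"))
```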

You can run a local registry by using the docker distribution project and looking at the local deploy instructions.

Dockerfile

The build recipe for an image: docker build reads the Dockerfile and produces an image from it. Vastly preferable to docker commit.

- .dockerignore ; not an instruction but a file listing paths to exclude from the build context.

- FROM ; sets the base image for subsequent instructions.

- MAINTAINER ; (deprecated, use LABEL instead) sets the Author field of the generated images.

- RUN ; executes any commands in a new layer on top of the current image and commits the results.

- CMD ; provides defaults for an executing container.

- EXPOSE ; informs Docker that the container listens on the specified network ports at runtime. NOTE: does not actually make ports accessible.

- ENV ; sets an environment variable.

- ADD ; copies new files, directories or remote files into the image. Invalidates caches. Avoid ADD and use COPY instead.

- COPY ; copies new files or directories into the image. By default this copies as root regardless of the USER/WORKDIR settings. Use --chown=<user>:<group> to give ownership to another user/group. (Same for ADD.)

- ENTRYPOINT ; configures a container that will run as an executable.

- VOLUME ; creates a mount point for externally mounted volumes or other containers.

- USER ; sets the user name for subsequent RUN / CMD / ENTRYPOINT instructions.

- WORKDIR ; sets the working directory.

- ARG ; defines a build-time variable.

- ONBUILD ; adds a trigger instruction that runs when the image is used as the base for another build.

- STOPSIGNAL ; sets the signal that will be sent to the container to make it exit.

- LABEL ; applies key/value metadata to your images, containers, or daemons.

- SHELL ; overrides the default shell Docker uses to run shell-form commands.

- HEALTHCHECK ; tells Docker how to test a container to check that it is still working.

- Examples

- Best practices for writing Dockerfiles

- Michael Crosby has some more Dockerfiles best practices / take 2.

- Building Good Docker Images / Building Better Docker Images

- Managing Container Configuration with Metadata

- How to write excellent Dockerfiles

Layers

The versioned filesystem in Docker is based on layers. They're like git commits or changesets for filesystems.

Links

Links are how Docker containers talk to each other through TCP/IP ports. Atlassian shows worked examples. You can also resolve links by hostname.

Links are a legacy feature and have largely been superseded by user-defined networks.

NOTE: If you want containers to ONLY communicate with each other through links, start the docker daemon with --icc=false to disable inter-container communication.

If you have a container with the name CONTAINER (specified by docker run --name CONTAINER) and in the Dockerfile, it has an exposed port:

EXPOSE 1337

Then if we create another container called LINKED like so:

docker run -d --link CONTAINER:ALIAS --name LINKED user/wordpress

Then the exposed ports and aliases of CONTAINER will show up in LINKED with the following environment variables:

$ALIAS_PORT_1337_TCP_PORT $ALIAS_PORT_1337_TCP_ADDR

And you can connect to it that way.
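The variable names follow a fixed pattern built from the upper-cased alias, the port and the protocol. The Python sketch below shows how a process inside LINKED might read them; ALIAS and port 1337 come from the example above, while `link_address` and the sample addresses are hypothetical and only for illustration.

```python
import os
from typing import Mapping, Optional, Tuple


def link_address(alias: str, port: int, proto: str = "tcp",
                 env: Optional[Mapping[str, str]] = None) -> Tuple[str, int]:
    """Return (host, port) for a legacy Docker link.

    Reads <ALIAS>_PORT_<port>_<PROTO>_ADDR and <ALIAS>_PORT_<port>_<PROTO>_PORT,
    which --link injects into the linking container.
    """
    env = os.environ if env is None else env
    prefix = f"{alias.upper()}_PORT_{port}_{proto.upper()}"
    return env[f"{prefix}_ADDR"], int(env[f"{prefix}_PORT"])


# Hand-written environment standing in for the one Docker would inject.
fake_env = {"ALIAS_PORT_1337_TCP_ADDR": "172.17.0.2", "ALIAS_PORT_1337_TCP_PORT": "1337"}
print(link_address("alias", 1337, env=fake_env))
```

Inside a real LINKED container you would call `link_address("ALIAS", 1337)` and let it read `os.environ`; a missing variable raises `KeyError`, which usually means the link was not set up.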

To delete links, use

docker rm --link /LINKED/ALIAS

Generally, linking between docker services is a subset of "service discovery", a big problem if you're planning to use Docker at scale in production. Please read The Docker Ecosystem: Service Discovery and Distributed Configuration Stores for more info.

Volumes

Docker volumes are free-floating filesystems: they don't have to be connected to a particular container. You can use volumes mounted from data-only containers for portability, but as of Docker 1.9.0 Docker has named volumes, which replace data-only containers. Consider using named volumes rather than data containers.

- docker volume create

- docker volume rm

- docker volume ls

- docker volume inspect

Volumes are useful in situations where you can't use links (which are TCP/IP only), for instance when two docker instances need to communicate by leaving files on a shared filesystem.

You can mount them in several docker containers at once, using docker run --volumes-from.

Because volumes are isolated filesystems, they are often used to store state from computations between transient containers. That is, you can have a stateless and transient container run from a recipe, blow it away, and then have a second instance of the transient container pick up from where the last one left off.

See advanced volumes for more details. Container42 is also helpful.

- You can map MacOS host directories as docker volumes:

docker run -v /Users/wsargent/myapp/src:/src

- You can use remote NFS volumes if you're feeling brave.

- You may also consider running data-only containers as described here to provide some data portability.

- Be aware that you can mount files as volumes.

Exposing ports

Exposing incoming ports through the host container is fiddly but doable.

This is done by mapping the container port to a host port with -p (here bound only to the localhost interface):

docker run -p 127.0.0.1:$HOSTPORT:$CONTAINERPORT --name CONTAINER -t someimage
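The -p value has the form [ip:][hostPort:]containerPort, where an empty host port lets Docker pick one and a /udp or /tcp suffix selects the protocol. The Python sketch below splits such a value into its parts; `parse_publish` and `PortMapping` are hypothetical names, and the sketch ignores port ranges and IPv6 addresses, so it is not a substitute for Docker's own parser.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]
    host_port: Optional[int]  # None: Docker picks a free host port
    container_port: int
    proto: str


def parse_publish(spec: str) -> PortMapping:
    """Parse a -p value such as "127.0.0.1:8080:80/tcp" (no IPv6, no ranges)."""
    proto = "tcp"
    if "/" in spec:
        spec, proto = spec.rsplit("/", 1)
    parts = spec.split(":")
    if len(parts) == 1:
        host_ip, host_port, container_port = None, "", parts[0]
    elif len(parts) == 2:
        host_ip, (host_port, container_port) = None, parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported -p value: {spec!r}")
    return PortMapping(
        host_ip or None,
        int(host_port) if host_port else None,
        int(container_port),
        proto,
    )


print(parse_publish("127.0.0.1:8080:80"))
```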

You can tell Docker that the container listens on the specified network ports at runtime by using EXPOSE:

EXPOSE <CONTAINERPORT>

Note that EXPOSE does not publish the port itself; -p does that. Alternatively, you can forward a port on your host to the container by hand with an iptables DNAT rule:

iptables -t nat -A DOCKER -p tcp --dport <LOCALHOSTPORT> -j DNAT --to-destination <CONTAINERIP>:<PORT>

If you're running Docker in VirtualBox, you then need to forward the port there as well, using forwarded_port. Define a range of ports in your Vagrantfile like this so you can map them dynamically:

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|
  ...
  (49000..49900).each do |port|
    config.vm.network :forwarded_port, :host => port, :guest => port
  end
  ...
end

If you forget which host port you mapped the container port to, use docker port to show it:

docker port CONTAINER $CONTAINERPORT

Best Practices

This is where general Docker best practices and war stories go:

- The Rabbit Hole of Using Docker in Automated Tests

- Bridget Kromhout has a useful blog post on running Docker in production at Dramafever.

- Building a Development Environment With Docker

- Discourse in a Docker Container

Docker-Compose

Compose is a tool for defining and running multi-container Docker applications. With Compose, you use a YAML file to configure your application’s services. Then, with a single command, you create and start all the services from your configuration. To learn more about all the features of Compose, see the list of features.

By using the following command you can start up your application:

docker-compose -f <docker-compose-file> up

You can also run docker-compose in detached mode with the -d flag, and then stop it whenever needed with:

docker-compose stop

You can bring everything down, removing the containers entirely, with the down command. Pass --volumes to also remove the data volumes.

Security

This is where security tips about Docker go. The Docker security page goes into more detail.

First things first: the Docker daemon runs as root. If you are in the docker group, you effectively have root access. If you expose the docker unix socket to a container, you are giving the container root access to the host.

Docker should not be your only defense. You should secure and harden it.

For an understanding of what containers leave exposed, you should read Understanding and Hardening Linux Containers by Aaron Grattafiori. This is a complete and comprehensive guide to the issues involved with containers, with a plethora of links and footnotes leading on to yet more useful content. The security tips that follow are useful if you've already hardened containers in the past, but are not a substitute for understanding.

Security Tips

For greatest security, you want to run Docker inside a virtual machine. This is straight from the Docker Security Team Lead -- slides / notes. Then, run with AppArmor / seccomp / SELinux / grsec etc to limit the container permissions. See the Docker 1.10 security features for more details.

Docker image ids are sensitive information and should not be exposed to the outside world. Treat them like passwords.

See the Docker Security Cheat Sheet by Thomas Sjögren: some good stuff about container hardening in there.

Check out the docker bench security script, download the white papers.

Snyk's 10 Docker Image Security Best Practices cheat sheet

You should start off by using a kernel with unstable patches for grsecurity / pax compiled in, such as Alpine Linux. If you are using grsecurity in production, you should spring for commercial support for the stable patches, same as you would do for RedHat. It's $200 a month, which is nothing to your devops budget.

Since Docker 1.11 you can limit the number of active processes running inside a container to prevent fork bombs. This requires Linux kernel 4.3 or later with CONFIG_CGROUP_PIDS=y in the kernel configuration.

docker run --pids-limit=64

Also available since Docker 1.11 is the ability to prevent processes from gaining new privileges. This feature has been in the Linux kernel since version 3.5. You can read more about it in this blog post.

docker run --security-opt=no-new-privileges

From the Docker Security Cheat Sheet (it's in PDF which makes it hard to use, so copying below) by Container Solutions:

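Turn off inter-container communication with:

docker -d --icc=false --iptables

(docker -d is the old way of starting the daemon; on current releases run dockerd --icc=false --iptables.)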

Set the container to be read-only:

docker run --read-only

Verify images with a hashsum:

docker pull debian@sha256:a25306f3850e1bd44541976aa7b5fd0a29be
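A digest like the one above is the SHA-256 hash of the image manifest, so pinning by digest means you get exactly the content you verified. The Python sketch below shows the underlying content-addressing check on an arbitrary byte string; `digest_of`, `matches_digest` and the sample bytes are illustrative, and nothing here talks to a registry.

```python
import hashlib


def digest_of(blob: bytes) -> str:
    """Return the content digest of `blob` in the "sha256:<hex>" form Docker uses."""
    return "sha256:" + hashlib.sha256(blob).hexdigest()


def matches_digest(blob: bytes, pinned: str) -> bool:
    """True if `blob` is exactly the content the pinned digest refers to."""
    return digest_of(blob) == pinned


content = b'{"schemaVersion": 2}'  # stand-in bytes, not a real image manifest
pinned = digest_of(content)
print(pinned)
print(matches_digest(content, pinned))         # unchanged content matches
print(matches_digest(content + b" ", pinned))  # any change breaks the match
```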

Set volumes to be read only:

docker run -v $(pwd)/secrets:/secrets:ro debian

Define and run a user in your Dockerfile so you don't run as root inside the container:

RUN groupadd -r user && useradd -r -g user user
USER user

User Namespaces

There's also work on user namespaces -- it is in 1.10 but is not enabled by default.

To enable user namespaces ("remap the userns") in Ubuntu 15.10, follow the blog example.

Security Videos

- Using Docker Safely

- Securing your applications using Docker

- Container security: Do containers actually contain?

- Linux Containers: Future or Fantasy?

Security Roadmap

The Docker roadmap talks about seccomp support. There is an AppArmor policy generator called bane, and they're working on security profiles.

Tips

Sources:

- 15 Docker Tips in 5 minutes

- CodeFresh Everyday Hacks Docker

Prune

The new Data Management Commands have landed as of Docker 1.13:

- docker system prune

- docker volume prune

- docker network prune

- docker container prune

- docker image prune

df

docker system df presents a summary of the space currently used by different docker objects.

Heredoc Docker Container

docker build -t htop - << EOF
FROM alpine
RUN apk --no-cache add htop
EOF

cheats

Last Ids

alias dl='docker ps -l -q'
docker run ubuntu echo hello world
docker commit $(dl) helloworld

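Commit with a new default command (older releases used -run with a JSON config; current releases use --change with a Dockerfile instruction):

docker commit --change='CMD ["postgres", "-too -many -opts"]' $(dl) postgres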

Get IP address

docker inspect $(dl) | grep -wm1 IPAddress | cut -d '"' -f 4

or with jq installed:

docker inspect $(dl) | jq -r '.[0].NetworkSettings.IPAddress'

or using a go template:

docker inspect -f '{{ .NetworkSettings.IPAddress }}' <container_name>
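The jq and Go-template variants both read the same JSON that docker inspect prints: a list with one object per container. The Python sketch below does the same with the standard json module on a trimmed, made-up sample; in practice you would feed it the output of docker inspect <container_name>. Note that the top-level NetworkSettings.IPAddress is only filled in for the default bridge network; containers on user-defined networks report their address under NetworkSettings.Networks.<name>.IPAddress. `container_ips` is a hypothetical helper.

```python
import json
from typing import Dict


def container_ips(inspect_output: str) -> Dict[str, str]:
    """Map network name -> IP address for the first container in `docker inspect` JSON."""
    settings = json.loads(inspect_output)[0]["NetworkSettings"]
    ips = {name: net.get("IPAddress", "")
           for name, net in (settings.get("Networks") or {}).items()}
    # The top-level field refers to the default bridge network.
    if settings.get("IPAddress"):
        ips.setdefault("bridge", settings["IPAddress"])
    return ips


sample = json.dumps([{
    "Name": "/web",
    "NetworkSettings": {
        "IPAddress": "172.17.0.2",
        "Networks": {"bridge": {"IPAddress": "172.17.0.2"},
                     "iptastic": {"IPAddress": "203.0.113.2"}},
    },
}])
print(container_ips(sample))
```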

To get the host's IP address, for example to pass it in as a build argument when building an image from a Dockerfile:

DOCKER_HOST_IP=`ifconfig | grep -E "([0-9]{1,3}\.){3}[0-9]{1,3}" | grep -v 127.0.0.1 | awk '{ print $2 }' | cut -f2 -d: | head -n1`

echo DOCKER_HOST_IP = $DOCKER_HOST_IP

docker build \
  --build-arg ARTIFACTORY_ADDRESS=$DOCKER_HOST_IP \
  -t sometag \
  some-directory/

Get port mapping

docker inspect -f '{{range $p, $conf := .NetworkSettings.Ports}} {{$p}} -> {{(index $conf 0).HostPort}} {{end}}' <containername>
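The template walks the NetworkSettings.Ports map, whose keys look like 80/tcp and whose values are lists of host bindings, or null when a port is exposed but not published (which makes the `index $conf 0` call fail). The Python sketch below prints the same mapping from docker inspect JSON and tolerates unpublished ports; the sample data and the `port_mappings` name are invented for illustration.

```python
import json
from typing import Dict, List


def port_mappings(inspect_output: str) -> Dict[str, List[str]]:
    """Map "port/proto" -> list of "hostIp:hostPort" bindings (empty if unpublished)."""
    ports = json.loads(inspect_output)[0]["NetworkSettings"].get("Ports") or {}
    return {
        container_port: [f"{b['HostIp']}:{b['HostPort']}" for b in (bindings or [])]
        for container_port, bindings in ports.items()
    }


sample = json.dumps([{"NetworkSettings": {"Ports": {
    "80/tcp": [{"HostIp": "127.0.0.1", "HostPort": "8080"}],
    "1337/tcp": None,
}}}])
for port, hosts in port_mappings(sample).items():
    print(port, "->", ", ".join(hosts) or "(not published)")
```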

Find containers by regular expression

for i in $(docker ps -a | grep "REGEXP_PATTERN" | cut -f1 -d" "); do echo $i; done

Get Environment Settings

docker run --rm ubuntu env

Kill running containers

docker kill $(docker ps -q)

Delete all containers (force!! running or stopped containers)

docker rm -f $(docker ps -qa)

Delete old containers

docker ps -a | grep 'weeks ago' | awk '{print $1}' | xargs docker rm

Delete stopped containers

docker rm -v $(docker ps -a -q -f status=exited)

Delete containers after stopping

docker stop $(docker ps -aq) && docker rm -v $(docker ps -aq)

Delete dangling images

docker rmi $(docker images -q -f dangling=true)

Delete all images

docker rmi $(docker images -q)

Delete dangling volumes as of Docker 1.9:

docker volume rm $(docker volume ls -q -f dangling=true)

Show image dependencies (old Docker releases only; the -viz option has since been removed)

docker images -viz | dot -Tpng -o docker.png

Slimming down Docker containers

Cleaning APT in a RUN layer

This should be done in the same layer as other apt commands. Otherwise, the previous layers still persist the original information and your images will still be fat.

RUN {apt commands} \
    && apt-get clean \
    && rm -rf /var/lib/apt/lists/* /tmp/* /var/tmp/*

Flatten an image

ID=$(docker run -d image-name /bin/bash)
docker export $ID | docker import - flat-image-name

For backup

ID=$(docker run -d image-name /bin/bash)
(docker export $ID | gzip -c > image.tgz)
gzip -dc image.tgz | docker import - flat-image-name

Monitor system resource utilization for running containers

To check the CPU, memory, and network I/O usage of a single container, you can use:

docker stats <container>

For all containers listed by id:

docker stats $(docker ps -q)

For all containers listed by name:

docker stats $(docker ps --format '{{.Names}}')

For all containers listed by image:

docker stats $(docker ps -q -f ancestor=ubuntu)
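docker stats derives its CPU % column from two consecutive samples of the container's counters. The Python sketch below reproduces the calculation the Docker CLI uses on Linux: the container's CPU time delta divided by the host's CPU time delta, scaled by the number of online CPUs, so 100% means one full CPU. The field names follow the Engine API stats JSON; the sample numbers and the `cpu_percent` name are made up for illustration.

```python
from typing import Any, Dict


def cpu_percent(stats: Dict[str, Any]) -> float:
    """CPU % as shown by `docker stats`, from one Engine API stats sample (Linux)."""
    cpu, pre = stats["cpu_stats"], stats["precpu_stats"]
    cpu_delta = cpu["cpu_usage"]["total_usage"] - pre["cpu_usage"]["total_usage"]
    system_delta = cpu.get("system_cpu_usage", 0) - pre.get("system_cpu_usage", 0)
    # Fall back to the per-CPU list length if online_cpus is missing or zero.
    online = cpu.get("online_cpus") or len(cpu["cpu_usage"].get("percpu_usage") or [])
    if system_delta > 0 and cpu_delta > 0:
        return cpu_delta / system_delta * online * 100.0
    return 0.0


# Made-up sample: the container used 0.2 s of CPU time while the host's
# 4 CPUs together accumulated 2 s (all counters are in nanoseconds).
sample = {
    "cpu_stats": {"cpu_usage": {"total_usage": 400_000_000},
                  "system_cpu_usage": 20_000_000_000, "online_cpus": 4},
    "precpu_stats": {"cpu_usage": {"total_usage": 200_000_000},
                     "system_cpu_usage": 18_000_000_000},
}
print(f"{cpu_percent(sample):.1f}%")
```

With these numbers the result is 40%, i.e. the container kept 0.4 of one CPU busy over the sampling interval.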

Remove all untagged images:

docker rmi $(docker images | grep "^<none>" | awk '{split($0,a," "); print a[3]}')

Remove container by a regular expression:

docker ps -a | grep wildfly | awk '{print $1}' | xargs docker rm -f

Remove all exited containers:

docker rm -f $(docker ps -a | grep Exit | awk '{ print $1 }')

Volumes can also be files: you can mount a single file as a volume. For example, you can inject a configuration file like this:

- copy file from container

docker run --rm httpd cat /usr/local/apache2/conf/httpd.conf > httpd.conf

- edit file

vim httpd.conf

- start container with modified configuration

docker run --rm -it -v "$PWD/httpd.conf:/usr/local/apache2/conf/httpd.conf:ro" -p "80:80" httpd

openshift

OpenShift is Red Hat's container platform [3], which runs on Kubernetes [4].

Container Orchestration

- http://decking.io/

- https://github.com/newrelic/centurion

- https://github.com/GoogleCloudPlatform/kubernetes

Kubernetes

Why use Kubernetes?

Kubernetes[4] has become the standard orchestration platform for containers. All the major cloud providers support it, making it the logical choice for organizations looking to move more applications to the cloud.

Kubernetes provides a common framework to run distributed systems so development teams have consistent, immutable infrastructure from development to production for every project. Kubernetes can manage scaling requirements, availability, failover, deployment patterns, and more.

Kubernetes’ capabilities include:

- Service and process definition

- Service discovery and load balancing

- Storage orchestration

- Container-level resource management

- Automated deployment and rollback

- Container health management

- Secrets and configuration management

What are the advantages of Kubernetes?

Kubernetes has many powerful and advanced capabilities. For teams that have the skills and knowledge to get the most out of it, Kubernetes delivers:

- Availability. Kubernetes clustering has very high fault tolerance built-in, allowing for extremely large scale operations.

- Auto-scaling. Kubernetes can scale up and scale down based on traffic and server load automatically.

- Extensive Ecosystem. Kubernetes has a strong ecosystem around Container Networking Interface (CNI) and Container Storage Interface (CSI) and inbuilt logging and monitoring tools.

- Active community.

However, Kubernetes’ complexity is overwhelming for a lot of people jumping in for the first time. The primary early adopters of Kubernetes have been sophisticated, tribal sets of developers from larger scale organizations with a do-it-yourself culture and strong independent developer teams with the skills to “roll their own” Kubernetes.

As the mainstream begins to look at adopting Kubernetes internally, this approach is often what is referenced in the broader community today. But this approach may not be right for every organization.

While Kubernetes has advanced capabilities, all that power comes with a price; jumping into the cockpit of a state-of-the-art jet puts a lot of power under you, but how to actually fly the thing is not obvious.

- documentation https://kubernetes.io/docs/home/

Metrics

Docker uses cgroups for resource accounting and limiting (and namespaces for isolation), so metrics can be obtained from the corresponding files within each container's cgroup.

- See https://www.docker.com/blog/gathering-lxc-docker-containers-metrics/

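- For network interface metrics, try executing the command in the container's network namespace (Docker does not register its namespaces under /var/run/netns, so you may first need to link the container's /proc/<pid>/ns/net there):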

ip netns exec <nsname> <command...>

- e.g.

ip netns exec mycontainer netstat -i
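The cgroup files mentioned above are plain text; memory.stat, for example, holds one "name value" pair per line. The Python sketch below parses that format from a string so it runs anywhere; the sample uses cgroup v1-style keys with invented values, and because the file's location depends on the cgroup version and driver, no path is hard-coded. `parse_cgroup_stat` is a hypothetical helper.

```python
from typing import Dict


def parse_cgroup_stat(text: str) -> Dict[str, int]:
    """Parse cgroup "key value" stat files such as memory.stat or cpu.stat."""
    stats: Dict[str, int] = {}
    for line in text.splitlines():
        fields = line.split()
        # Keep only well-formed "name <integer>" lines.
        if len(fields) == 2 and fields[1].isdigit():
            stats[fields[0]] = int(fields[1])
    return stats


sample = """cache 1048576
rss 2097152
pgfault 1234
"""
stats = parse_cgroup_stat(sample)
print(stats["rss"] / 2**20, "MiB resident")
```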

namespaces and cgroups

See media:nginx-namespaces.pdf.

the fight

There is an ongoing fight between Red Hat and Docker over container initialisation and systemd [5].

References

- [1] https://www.linuxjournal.com/content/docker-lightweight-linux-containers-consistent-development-and-deployment?page=0,1

- [2] Setup Docker

- [3] openshift https://docs.openshift.com/container-platform/3.5/architecture/index.html

- [4] kubernetes https://kubernetes.io/

- [5] systemd initialisation and docker https://lwn.net/Articles/676831/
